As with so many things these days, it starts with clickbait.

"17 tricks to running a digital agency" was, for the most part, the same kind of unremarkable listicle that surfaces on Twitter from time to time. You know the sort - it keeps things light, you chuckle at a few zingers about office-dogs, exposed piping and wall-mounted fixies. You hit retweet and carry on with the day. This time though, there was one section that stood out to me. On naming your agency, it read:

Just pick a random word or two, and go with that

Could it really be that simple? Let's find out.

Striking the right tone. permalink

Random is a pretty strong word.

Think about the web agencies that you admire. The best names might seem random but usually carry some extra weight or depth behind them. This is all perhaps quite obvious, but it pokes an immediate flaw in our meme-ified advice. How can our choice be random, but still retain some deeper meaning?

Fortunately for our soon-to-be-industry-leading web agency, there's a whole branch of machine learning that can help us automate exactly this kind of problem: Natural Language Processing.

An introduction to NLP. permalink

Natural Language Processing, or NLP, is all about training computers to process and understand human languages. At this point you might be thinking that teaching language to humans is already pretty difficult and, honestly, you wouldn't be wrong. NLP is a really dense topic, but building even the smallest NLP applications can feel hugely empowering.

That said, NLP tutorials have a tendency to get very technical, very quickly and they often assume that anyone entering the field is coming armed with a dual-PhD in linguistics and computer science. On top of that, NLP and machine learning are often cushioned by a thick layer of marketing BS that makes it hard to separate reality from building SkyNet. It's clear that NLP is an exciting topic but, for newcomers, all of the above means that it isn't always clear what you might actually want to do with it.

In this tutorial, we're going to start slow and ease into a few core NLP concepts. We'll write a short script to 'borrow' the tone of voice from other agencies that we like. We'll do this by scraping their websites and plucking a list of nouns and adjectives from the text content. Finally, as the original meme suggested, we'll randomly mash some of those words together to generate a few names for our awesome new web agency.

Pre-requisites and setup. permalink

Python is considered to be the de facto place to start for natural language processing and to generate our agency name, we're going to need Python 3.7. We'll also need to manage our dependencies with a Pipfile (for the JavaScript fluent amongst you: think package.json). For that, we're also going to need pipenv.

If you're new to Python, then you can install everything you need with the brilliant Hitchhiker's guide. Once you have installed Python itself, the guide has a handy page explaining how to install pipenv. This tutorial will assume some knowledge of Python syntax, but I'll keep the code as simple as possible.

With everything installed, create a project directory and we're ready to get going.

The Corpus. permalink

Before we can process any text content, we first need to find a suitable a body of text. In NLP terminology this input text is known as our 'corpus'.

In our case, we want to grab our text content from other web agencies that we admire, so we can kick things off by writing a function to grab text content from a remote url. Fortunately the Beautiful Soup package helps us to do this.

Let's install Beautiful Soup, and the HTML5 library to our project dependencies. Open the project directory in your terminal and run:

pipenv install bs4 html5libNext, create a file called main.py with the following contents:

import requests # we'll need this to make requests to URLs

from bs4 import BeautifulSoup, Comment # HTML and comment parsers

def read_urls(urls):

content_string = ''

for page in urls:

print(f'Scraping URL: {page}')

# Synchronous request to page, and store returned markup

scrape = requests.get(page).content

# Parse the returned markup with BeautifulSoup's html5 parser

soup = BeautifulSoup(scrape, "html5lib")

# loop script tags and remove them

for script_tag in soup.find_all('script'):

script_tag.extract()

# loop style tags and remove them

for style_tag in soup.find_all('style'):

style_tag.extract()

# find all comments and remove them

for comment in soup(text=lambda text: isinstance(text, Comment)):

comment.extract()

# Join all the scraped text content

text = ''.join(soup.findAll(text=True))

# Append to previously scraped text

content_string += text

return content_stringYou don't need to worry too much about the specific code of the function above, but it goes as follows: It read a list of URLs, making a request to each before parsing the content with BeautifulSoup. The scraped markup needs to be tidied up a bit though, so next it removes any comments, script or style tags before pulling out the remaining text content and appending it to a string. When every URL in the list has been scraped, the string is returned as our corpus.

Now we have our corpus, we can start to process it.

Lost in SpaCy. permalink

SpaCy bills itself as 'Industrial-strength Natural Language Processing in Python'. Whereas packages like NLTK offer immense amounts of flexibility and configuration, the flipside is a steep learning curve. SpaCy on the other hand, can be set up and ready to go in minutes.

We need to add SpaCy to our project as a dependency. Open your project directory in your terminal and run:

pipenv install spacySpaCy doesn't do much by itself though - it needs a pre-trained language model to work with. Fortunately several are available out of the box. We'll also install the standard english model and save it to our Pipfile:

pipenv install https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-2.2.5/en_core_web_sm-2.2.5.tar.gz With our dependencies installed, let's get back into the code. Open up main.py and add SpaCy to our imports at the top:

import requests # we'll need this to make requests to URLs

from bs4 import BeautifulSoup, Comment # HTML and comment parsers

import en_core_web_sm # SpaCy's english modelNext, underneath our read_urls function, we can define a new function to allow SpaCy to parse the corpus.

def get_tokens(corpus):

# load the pre-trained english model

nlp = en_core_web_sm.load()

# parse the corpus and return the processed data

return nlp(corpus)Notice the name of our function, get_tokens. What's that all about?

Parts of Speech and Tokenization. permalink

Most languages divide their words up into different categories. These usually comprise nouns, verbs, adjectives, adverbs and more. In linguistic terms, these categories are known as 'parts of speech', or POS. This is important to us as, to generate our agency name, we want to single out nouns and adjectives. But how can we get at them?

One place to start would be to break each sentence up into its individual parts but this isn't a straightforward as using String split(). We need a way to intelligently divide our text, whilst retaining the individual purpose of each word. Doing this manually would be too time consuming, so this is where machine learning becomes necessary. Most NLP libraries use trained language data to intelligently scan, compare and identify the characteristics of each word. Once identified, these words are separated out into 'tokens'. This process is known as tokenization and is one of the most important core principles of NLP.

Our get_tokens function tokenizes our corpus. Or, in simpler terms, SpaCy reads over the corpus, splits out individual words and returns a list of tokens. In creating that list, SpaCy also adds a lot of extra information to each extracted word, such as its relationship to other words in the sentence or which part of speech it is. We can use those attributes to filter the list down to find a specific part of speech.

Let's create another function:

def get_word_list(tokens, part_of_speech):

# extract a list of words and capitalise them

list_of_words = [

word.text.capitalize()

for word in tokens

if word.pos_ == part_of_speech

]

# make the list unique by casting it to a set

unique_list = set(list_of_words)

# cast it back to a list and return

return list(unique_list)This function receives the list of tokens, a part of speech code, and then filters the results using a list comprehension (again, for JS developers, a list comprehension is a bit like a cross between Array.map and Array.filter). To break this list comprehension down, it's best to work from the inside out – For every word in our tokens list, we grab word.text and capitalise it, but only if the part of speech (word.pos_) matches our POS code parameter. You can find a full list of these POS codes in the SpaCy POS documentation. If you're curious about what other attributes might be available on each token, you can read the Token reference.

Lastly, we can filter out any duplicated words by casting the results to a set(). We'll need to perform some list actions later though, so it is cast back to a list before being returned.

This is all looking great and we can now use this function to create a list of nouns, adjectives, verbs or adverbs. There's a slight problem though - if you were to use this function as-is, you might find that the list of words you get back is a bit of a mess.

Stop words. permalink

Most languages have a set of core words that occur frequently. In English, this might be words like "the", "at", "from", "in", "for" and so on. Whilst these words are useful for humans, they can quite quickly get in the way extracting meaning with NLP. As a result, it's common to filter them out and these kinds of words are known as stop words.

Our script is no different and the stop words need to go. Whilst we're here, we can also get rid of any rogue punctuation. Fortunately SpaCy tokens have two handy attributes that allow us to do this - the aptly named is_stop and is_punct. Let's update the list comprehension in our get_word_data function:

list_of_words = [

word.text.capitalize()

for word in tokens

if word.pos_ == part_of_speech

and not word.is_stop

and not word.is_punct

]Now we can extract a nice sanitised list of words, but there's a tiny bit more optimisation that we can do.

Lemmatization. permalink

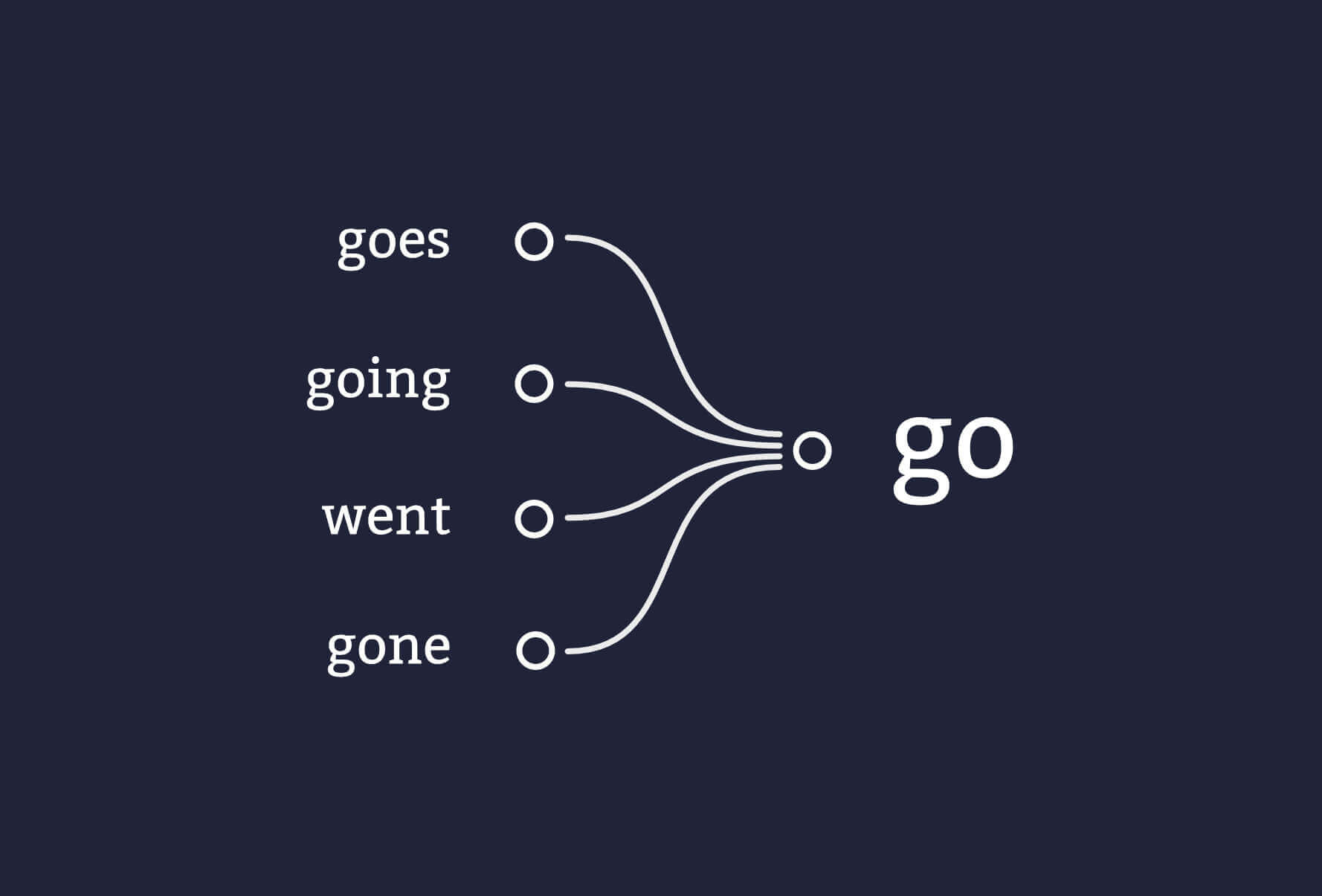

Let's say you've scraped your website content and in amongst your list of tokens are the words "goes", "going", "went" and "gone". That's quite a few words to express the same base idea. We can make this simpler.

These words could all be lumped together under the core concept: "go". In linguistic terms, we call this a word's 'lemma' or the uninflected root form of a word.

The simplest way to think of a lemma is to see it as what you might look for in a dictionary:

Out of the box, SpaCy provides a convenient means to convert any matched term back to its lemma. This is called lemmatization. Let's hone our list of tokens down into a much tighter list of core ideas by updating our list comprehension. We'll change the first line to call .lemma_ instead of .text.

Your final get_word_list function should look like this:

def get_word_list(tokens, part_of_speech):

# extract a list of lemmas and capitalise them

list_of_words = [

word.lemma_.capitalize() # <-- update .text to .lemma_

for word in tokens

if word.pos_ == part_of_speech

and not word.is_stop

and not word.is_punct

]

# make the list unique by casting it to a set

unique_list = set(list_of_words)

# cast it back to a list and return

return list(unique_list)Bringing it all together. permalink

Now we've set up a few functions and we've covered the core concepts, let's pull everything together. To generate the final name, we're going to need to be able to make random selections from our word lists. At the top of main.py, add random to your imports.

import requests # we'll need this to make requests to URLs

from bs4 import BeautifulSoup, Comment # HTML and comment parsers

import en_core_web_sm # SpaCy's english model

import randomAt the end of the file, underneath your get_word_list function, paste the following:

# create a list of urls to scrape

# For illustration, I'm using my website

# but replace with any urls of your choice

CORPUS = read_urls([

'https://robbowen.digital/',

'https://robbowen.digital/work',

'https://robbowen.digital/about'

])

# parse the corpus and tokenize

TOKENS = get_tokens(CORPUS)

# extract a unique list of noun lemmas from the token list

nouns = get_word_list(TOKENS, "NOUN")

# extract a unique list of adjective lemmas from the token list

adjectives = get_word_list(TOKENS, "ADJ")

# create a random list of 500 nouns and adjective-noun combos

# cast to a set to make sure that we only get unique results

list_of_combos = set(

[

# String interpolation of an adjective and a noun...

f'{random.choice(adjectives)} {random.choice(nouns)}'

# 50% of the time...

if random.choice([True, False]) is True

# else return a random noun

else random.choice(nouns)

# and repeat this 500 times

for x in range(500)

]

)

# Write list of combinations to a text file

output_file = open("./output.txt", 'w')

for item in list_of_combos:

output_file.write(f'{item}\r\n')Let's break that code down.

First of all, we pass a list of URLs into our read_urls function and store the result in a constant for later. Be sure to change the example URLs to your own choices here, and remember: the more text on your chosen pages, the better your results will be.

Next, we pass the corpus into our get_tokens function. This is where SpaCy does its magic, tokenizing the corpus and returning a list of data-rich tokens.

To generate our agency name, we want some random nouns. Nouns by themselves aren't much fun, so we'll grab some adjectives too. This uses the get_word_list function we created earlier. The first argument is the list of tokens, and the second filters by a 'part of speech code'.

Now we have our nouns and adjectives lists we can prep our final list of names. We want to generate a list of names – let's say 500 of them. We can use another list comprehension to do this.

Remember the random library we imported above? It gives us access to the random.choice function. As the name implies, it allows us to randomly choose a single item from a list. random.choice also gives us a handy way to embed a 50/50 chance into our comprehension. Here, an if-else means each iteration of our loop will return either an adjective-noun combo or a single noun. Finally, with the comprehension complete, we wrap the list in set() to ensure that we only have unique results.

Ok, everything is ready. At the very end we create a file called output.txt, loop through our list of names and write each to a new line.

And...we're done! If you need to, you can double check your code against the final source on github.

Generate the name. permalink

It's time to fire up the final script and generate some names. At your terminal, run the following command:

pipenv run python main.pyIf all goes well, you should have now a file named output.txt chock full of multi-award-winning agency names.

And the winner is.... permalink

"Just pick a random word or two and go with that". So, after all this, what name did I go with?

Well, I had some real gems: Loaf, Strategic Bear, Rise, Chaotic Rest, Bold Tomorrow. After a few minutes crawling through the output list though, I spotted the one.

Exciting, yet confident. Like a life-raft in a sea of comedic nonsense:

Wrapping up. permalink

Ok, I get it. Like most clickbait, this might not have been a completely satisfying ending. Hopefully though, you've learned a little about the potential application of even the most superficial Natural Language Processing scripts.

To recap, in this introduction, we've used and learned about:

- Corpus: The body of text to be processed

- Parts of speech: The name given to the categories of words comprising nouns, verbs, adjectives etc.

- Tokenization: The act of splitting sentences into individual words, or tokens

- Stop words: Small, frequently occurring words that we do not wish to include in our results i.e. "the", "for", "it" etc.

- Lemmatization: Changing a word back to its root concept, or dictionary form i.e. the lemma of "best" or "better" is "good"

From these core concepts, the possibilities are vast. Imagine going into a pitch having scanned the client's website in advance to profile exactly how they talk about themselves. Or perhaps you could take the jump into sentiment analysis to scan how well received a particular topic is on Twitter. There's still a lot to learn but, with these core building blocks, you're on your way.

If you enjoyed this article and have any ideas on how to extend this example, or if you generated a particularly badass name, then please let me know on Mastodon.

If you're looking to learn more about NLP, I strongly recommend the Advanced NLP with SpaCy course by Ines Montani - it's free, and really rather good.